VXLAN BGP EVPN - 1 - Underlay


In this series, I intend to provide the reader with all the tools needed to get started with labbing and learning VXLAN BGP EVPN.

Introduction

I will be using EVE-NG to simulate all the topologies in this series. This series will be extremely beneficial if you already have a theoretical understanding of VXLAN BGP EVPN. In this post, let’s concentrate on getting the underlay ready.

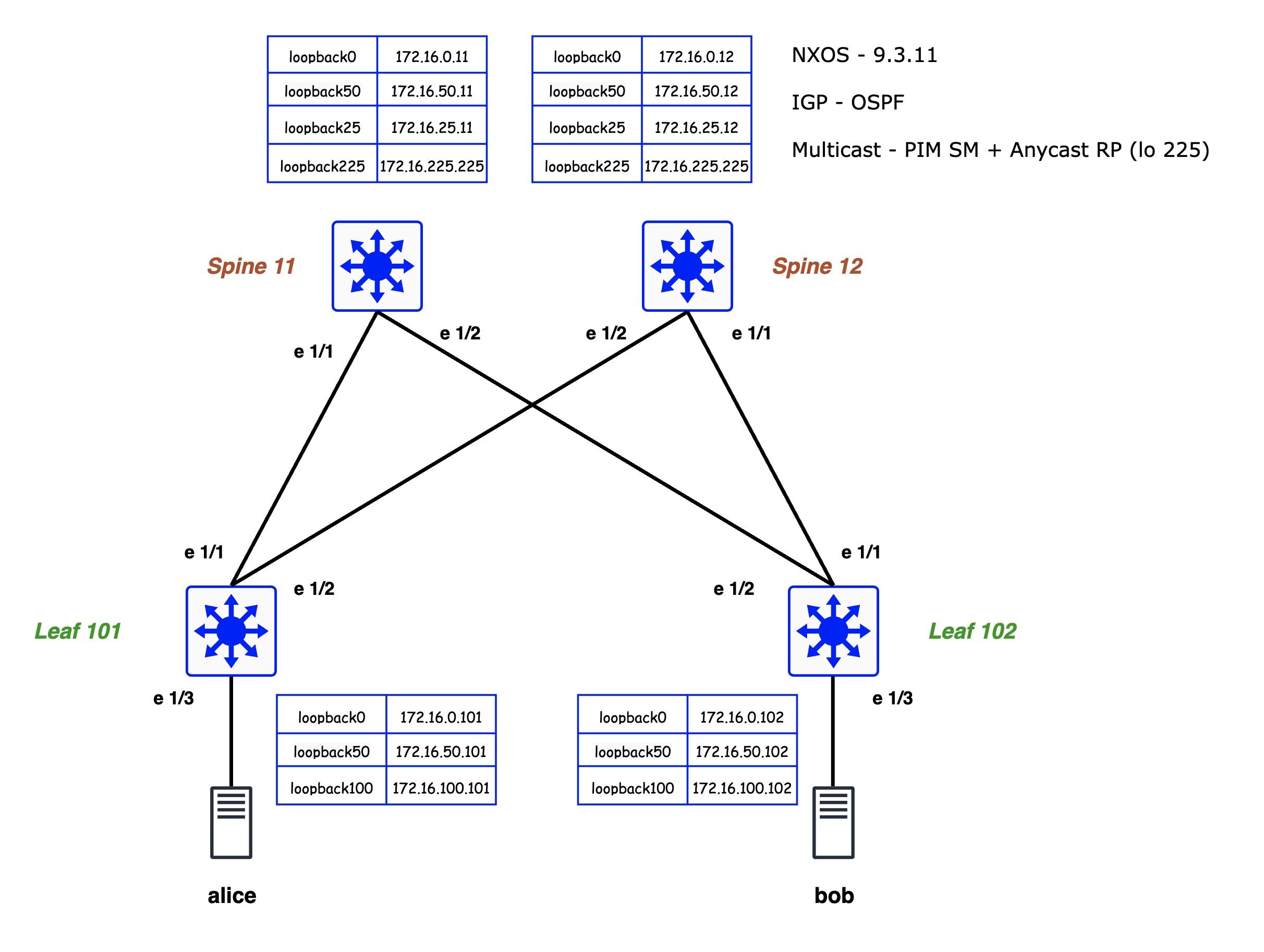

Topology

Configuring the IGP

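- We will use OSPF to provide IP reachability between the leaves and the spines.

- On NX-OS, this involves enabling the OSPF feature, configuring the OSPF process, and then enabling OSPF on each interface with "ip router ospf <process> area 0".

- VXLAN encapsulation adds 50 bytes of overhead (outer IPv4, UDP, and VXLAN headers plus the encapsulated inner Ethernet header), so the underlay must support an MTU larger than 1500 bytes. Jumbo frames are preferred; we use an MTU of 9000 on the fabric links.

- We are using IP unnumbered, so we don't need to assign IP addresses to the physical interfaces. For example, loopback0 has an IP address, and we instruct the switch to borrow it for its physical interfaces Eth1/1 and Eth1/2. On NX-OS, IP unnumbered on an Ethernet interface also requires "medium p2p", which is why that command appears in the configuration.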
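To make the MTU requirement concrete, here is a small Python sketch of the arithmetic. It assumes an IPv4 underlay and treats the "mtu 9000" on the fabric links as the IP MTU of the routed link; the function names are illustrative only and do not come from any tool.

```python
# Header sizes in bytes for VXLAN over an IPv4 underlay.
INNER_ETHERNET = 14  # encapsulated inner Ethernet header (untagged)
DOT1Q_TAG = 4        # only if the inner frame keeps its 802.1Q tag (assumption)
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20


def vxlan_overhead(inner_dot1q: bool = False) -> int:
    """Bytes VXLAN adds on top of the inner IP packet, as seen by the underlay IP MTU."""
    overhead = INNER_ETHERNET + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4
    return overhead + (DOT1Q_TAG if inner_dot1q else 0)


def required_underlay_ip_mtu(inner_ip_mtu: int, inner_dot1q: bool = False) -> int:
    """Smallest underlay IP MTU that carries inner IP packets of inner_ip_mtu bytes."""
    return inner_ip_mtu + vxlan_overhead(inner_dot1q)


def max_inner_ip_mtu(underlay_ip_mtu: int, inner_dot1q: bool = False) -> int:
    """Largest inner IP packet that fits through the given underlay IP MTU."""
    return underlay_ip_mtu - vxlan_overhead(inner_dot1q)


if __name__ == "__main__":
    print(required_underlay_ip_mtu(1500))                    # 1550
    print(required_underlay_ip_mtu(1500, inner_dot1q=True))  # 1554
    print(max_inner_ip_mtu(9000))                            # 8950
```

With a 9000-byte underlay, hosts can keep a standard 1500-byte MTU (or even use jumbo frames themselves) without fragmentation in the fabric.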

Leaf 101

(Use the template below for Leaf 102, replacing 101 with 102 in the router ID and loopback addresses.)

```
feature ospf

router ospf underlay-dc-delhi
  router-id 172.16.0.101

int loopback0
  description **underlay-router-ID/unnumbered**
  ip address 172.16.0.101/32
  ip router ospf underlay-dc-delhi area 0
  ip ospf network point-to-point

int loopback50
  description **Overlay - BGP Peering interface**
  ip address 172.16.50.101/32
  ip router ospf underlay-dc-delhi area 0
  ip ospf network point-to-point

int loopback100
  description **Overlay -VTEP NVE**
  ip address 172.16.100.101/32
  ip router ospf underlay-dc-delhi area 0
  ip ospf network point-to-point

int eth1/1
  description *To SPINE*
  no switchport
  medium p2p
  ip unnumbered loopback0
  ip router ospf underlay-dc-delhi area 0
  ip ospf network point-to-point
  mtu 9000
  no shut

int eth1/2
  description *To SPINE*
  no switchport
  medium p2p
  ip unnumbered loopback0
  ip router ospf underlay-dc-delhi area 0
  ip ospf network point-to-point
  mtu 9000
  no shut
```
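Since Leaf 102 differs from Leaf 101 only in its node number, the template can be rendered rather than edited by hand. The Python sketch below is a hypothetical helper, not part of NX-OS or any automation tool. It assumes the addressing pattern visible in this lab (172.16.0.N on loopback0, 172.16.50.N on loopback50, 172.16.100.N on loopback100, where N is the leaf number) and reproduces the Leaf 101 stanzas above.

```python
OSPF_PROCESS = "underlay-dc-delhi"


def loopback(name: str, description: str, address: str) -> list[str]:
    """An OSPF-enabled /32 loopback stanza, as in the Leaf 101 template."""
    return [
        f"int {name}",
        f"  description {description}",
        f"  ip address {address}/32",
        f"  ip router ospf {OSPF_PROCESS} area 0",
        "  ip ospf network point-to-point",
    ]


def uplink(name: str) -> list[str]:
    """An unnumbered, jumbo-MTU routed uplink towards a spine."""
    return [
        f"int {name}",
        "  description *To SPINE*",
        "  no switchport",
        "  medium p2p",
        "  ip unnumbered loopback0",
        f"  ip router ospf {OSPF_PROCESS} area 0",
        "  ip ospf network point-to-point",
        "  mtu 9000",
        "  no shut",
    ]


def render_leaf_underlay(n: int) -> str:
    """Render the underlay config for leaf number n (hypothetical helper)."""
    if not 1 <= n <= 254:
        raise ValueError("leaf number must fit in the last octet (1-254)")
    lines = ["feature ospf", f"router ospf {OSPF_PROCESS}", f"  router-id 172.16.0.{n}"]
    lines += loopback("loopback0", "**underlay-router-ID/unnumbered**", f"172.16.0.{n}")
    lines += loopback("loopback50", "**Overlay - BGP Peering interface**", f"172.16.50.{n}")
    lines += loopback("loopback100", "**Overlay -VTEP NVE**", f"172.16.100.{n}")
    for port in ("eth1/1", "eth1/2"):
        lines += uplink(port)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_leaf_underlay(102), end="")
```

Keeping the per-node values in one place makes it harder to paste a stale .101 address onto Leaf 102.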

Spine 11

(Use the template below for Spine 12, replacing 11 with 12 in the router ID and loopback addresses.)

```
feature ospf

router ospf underlay-dc-delhi
  router-id 172.16.0.11

int loopback0
  description **underlay-router-ID/unnumbered**
  ip address 172.16.0.11/32
  ip router ospf underlay-dc-delhi area 0
  ip ospf network point-to-point

int loopback50
  description **Overlay - BGP Peering interface**
  ip address 172.16.50.11/32
  ip router ospf underlay-dc-delhi area 0
  ip ospf network point-to-point

int eth1/1
  description **To leaf**
  no switchport
  medium p2p
  ip unnumbered loopback0
  ip router ospf underlay-dc-delhi area 0
  ip ospf network point-to-point
  mtu 9000
  no shut

int eth1/2
  description **To Leaf**
  no switchport
  medium p2p
  ip unnumbered loopback0
  ip router ospf underlay-dc-delhi area 0
  ip ospf network point-to-point
  mtu 9000
  no shut
```

Verification

- On Leaf 101, the OSPF neighbor state is FULL to both Spine 11 and Spine 12, since one link goes to each spine.

- Leaf 101 reaches Leaf 102's loopbacks (172.16.0.102, 172.16.50.102, and 172.16.100.102) over two equal-cost (ECMP) paths, one through each spine.

```
leaf-101# show ip ospf neighbors
 OSPF Process ID underlay-dc-delhi VRF default
 Total number of neighbors: 2
 Neighbor ID     Pri State            Up Time  Address         Interface
 172.16.0.11       1 FULL/ -          2d07h    172.16.0.11     Eth1/1
 172.16.0.12       1 FULL/ -          2d07h    172.16.0.12     Eth1/2
```
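Checking neighbor state by eye works for two spines but gets tedious as the fabric grows. The Python sketch below parses text shaped like the output above and confirms that every expected spine is FULL. The sample is copied from the Leaf 101 output; the regular expression and function names are assumptions of this sketch. On a real switch, NX-OS can also emit structured output with "| json", which is usually a sturdier input than screen text.

```python
import re

# Excerpt of the Leaf 101 output shown above.
SAMPLE = """\
 OSPF Process ID underlay-dc-delhi VRF default
 Total number of neighbors: 2
 Neighbor ID     Pri State            Up Time  Address         Interface
 172.16.0.11       1 FULL/ -          2d07h    172.16.0.11     Eth1/1
 172.16.0.12       1 FULL/ -          2d07h    172.16.0.12     Eth1/2
"""

# One neighbor row: router ID, priority, state ("FULL/ -", "FULL/DR", ...),
# uptime, neighbor address, local interface.
ROW = re.compile(
    r"^\s*(?P<rid>\d+(?:\.\d+){3})\s+(?P<pri>\d+)\s+(?P<state>[A-Z]+/\s*\S*)\s+"
    r"(?P<uptime>\S+)\s+(?P<addr>\d+(?:\.\d+){3})\s+(?P<intf>\S+)\s*$"
)


def parse_ospf_neighbors(text: str) -> dict[str, dict[str, str]]:
    """Map neighbor router ID to its adjacency state and local interface."""
    neighbors = {}
    for line in text.splitlines():
        m = ROW.match(line)
        if m:
            neighbors[m["rid"]] = {
                "state": m["state"].split("/")[0],  # "FULL/ -" -> "FULL"
                "interface": m["intf"],
            }
    return neighbors


def all_full(neighbors: dict[str, dict[str, str]], expected: list[str]) -> bool:
    """True if exactly the expected neighbors are present and all are FULL."""
    return set(neighbors) == set(expected) and all(
        n["state"] == "FULL" for n in neighbors.values()
    )


if __name__ == "__main__":
    nbrs = parse_ospf_neighbors(SAMPLE)
    print(all_full(nbrs, ["172.16.0.11", "172.16.0.12"]))  # True
```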

```
leaf-101# show ip route ospf
IP Route Table for VRF "default"
'*' denotes best ucast next-hop
'**' denotes best mcast next-hop
'[x/y]' denotes [preference/metric]
'%<string>' in via output denotes VRF <string>

172.16.0.11/32, ubest/mbest: 1/0
    *via 172.16.0.11, Eth1/1, [110/41], 2d07h, ospf-underlay-dc-delhi, intra
     via 172.16.0.11, Eth1/1, [250/0], 2d07h, am
172.16.0.12/32, ubest/mbest: 1/0
    *via 172.16.0.12, Eth1/2, [110/41], 2d07h, ospf-underlay-dc-delhi, intra
     via 172.16.0.12, Eth1/2, [250/0], 2d07h, am
172.16.0.102/32, ubest/mbest: 2/0
    *via 172.16.0.11, Eth1/1, [110/81], 2d07h, ospf-underlay-dc-delhi, intra
    *via 172.16.0.12, Eth1/2, [110/81], 2d07h, ospf-underlay-dc-delhi, intra
172.16.50.11/32, ubest/mbest: 1/0
    *via 172.16.0.11, Eth1/1, [110/41], 2d07h, ospf-underlay-dc-delhi, intra
172.16.50.12/32, ubest/mbest: 1/0
    *via 172.16.0.12, Eth1/2, [110/41], 2d07h, ospf-underlay-dc-delhi, intra
172.16.50.102/32, ubest/mbest: 2/0
    *via 172.16.0.11, Eth1/1, [110/81], 2d07h, ospf-underlay-dc-delhi, intra
    *via 172.16.0.12, Eth1/2, [110/81], 2d07h, ospf-underlay-dc-delhi, intra
172.16.100.102/32, ubest/mbest: 2/0
    *via 172.16.0.11, Eth1/1, [110/81], 2d07h, ospf-underlay-dc-delhi, intra
    *via 172.16.0.12, Eth1/2, [110/81], 2d07h, ospf-underlay-dc-delhi, intra
```
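The ECMP claim can be checked mechanically: a prefix is load-shared when it has two or more best next hops ("*via") with the same metric. The Python sketch below parses an excerpt of the Leaf 101 routing table above; the regular expressions and function names are assumptions of this sketch, and non-best entries such as the "am" routes are ignored because they lack the leading asterisk.

```python
import re

# Excerpt of the Leaf 101 "show ip route ospf" output shown above.
SAMPLE = """\
172.16.0.11/32, ubest/mbest: 1/0
    *via 172.16.0.11, Eth1/1, [110/41], 2d07h, ospf-underlay-dc-delhi, intra
     via 172.16.0.11, Eth1/1, [250/0], 2d07h, am
172.16.0.102/32, ubest/mbest: 2/0
    *via 172.16.0.11, Eth1/1, [110/81], 2d07h, ospf-underlay-dc-delhi, intra
    *via 172.16.0.12, Eth1/2, [110/81], 2d07h, ospf-underlay-dc-delhi, intra
172.16.100.102/32, ubest/mbest: 2/0
    *via 172.16.0.11, Eth1/1, [110/81], 2d07h, ospf-underlay-dc-delhi, intra
    *via 172.16.0.12, Eth1/2, [110/81], 2d07h, ospf-underlay-dc-delhi, intra
"""

PREFIX = re.compile(r"^(?P<prefix>\d+(?:\.\d+){3}/\d+), ubest/mbest:")
# Best unicast next hop: "*via <nh>, <interface>, [<preference>/<metric>]"
BEST_VIA = re.compile(
    r"^\s*\*via (?P<nh>[\d.]+), (?P<intf>[^,]+), \[(?P<pref>\d+)/(?P<metric>\d+)\]"
)


def best_paths(text: str) -> dict[str, list[tuple[str, int]]]:
    """Map each prefix to its best unicast (interface, metric) next hops."""
    routes: dict[str, list[tuple[str, int]]] = {}
    current = None
    for line in text.splitlines():
        m = PREFIX.match(line)
        if m:
            current = m["prefix"]
            routes[current] = []
            continue
        v = BEST_VIA.match(line)
        if v and current is not None:
            routes[current].append((v["intf"], int(v["metric"])))
    return routes


def is_ecmp(routes: dict[str, list[tuple[str, int]]], prefix: str, min_paths: int = 2) -> bool:
    """True if the prefix has at least min_paths best next hops, all with one metric."""
    paths = routes.get(prefix, [])
    return len(paths) >= min_paths and len({metric for _, metric in paths}) == 1


if __name__ == "__main__":
    table = best_paths(SAMPLE)
    print(is_ecmp(table, "172.16.100.102/32"))  # True
    print(is_ecmp(table, "172.16.0.11/32"))     # False
```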

Multicast and Rendezvous Points (RP)

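- To propagate Layer 2 BUM (broadcast, unknown unicast, and multicast) traffic across the fabric, we can use either underlay multicast or ingress replication.

- In VXLAN BGP EVPN, the leaves are both sources and receivers of L2 BUM traffic. With multicast replication, every L2 VNI is mapped to a multicast group address, so a leaf sends BUM traffic for that VNI to the group and receives the other leaves' BUM traffic for that VNI from the same group.

- With multicast, the two preferred RP redundancy designs are Anycast RP and Phantom RP.

- Let's set up Anycast RP in our topology.

- Both spines have loopback225 with the same address (172.16.225.225), which serves as the Anycast RP. Each spine also has loopback25 with a unique address (172.16.25.11 on Spine 11, 172.16.25.12 on Spine 12), which identifies it as a member of the Anycast-RP set.

- Enable PIM sparse mode on the underlay L3 links and on the relevant loopbacks (loopback0 and loopback100 on the leaves, loopback25 and loopback225 on the spines).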
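The VNI-to-group idea can be modelled in a few lines of Python. This is a conceptual sketch only: the VNIs (10010, 10020), the groups (239.1.1.10, 239.1.1.20), and which leaf hosts which VNI are hypothetical values, since no VNIs are configured in this post. Each leaf joins the group of every VNI it hosts, and a BUM frame sent to a group reaches every other leaf that joined it.

```python
from collections import defaultdict

# Hypothetical VNI-to-group mapping (not configured anywhere in this post).
VNI_TO_GROUP = {10010: "239.1.1.10", 10020: "239.1.1.20"}

# Hypothetical: which L2 VNIs each leaf (VTEP) hosts.
LEAF_VNIS = {
    "leaf-101": {10010, 10020},
    "leaf-102": {10010},
}


def group_members(leaf_vnis, vni_to_group):
    """Each leaf joins the group of every VNI it hosts, becoming a receiver."""
    members = defaultdict(set)
    for leaf, vnis in leaf_vnis.items():
        for vni in vnis:
            members[vni_to_group[vni]].add(leaf)
    return members


def flood_bum(source_leaf, vni, leaf_vnis=LEAF_VNIS, vni_to_group=VNI_TO_GROUP):
    """The source leaf sends BUM for `vni` to its group; other members receive it."""
    group = vni_to_group[vni]
    receivers = group_members(leaf_vnis, vni_to_group)[group] - {source_leaf}
    return group, sorted(receivers)


if __name__ == "__main__":
    print(flood_bum("leaf-101", 10010))  # ('239.1.1.10', ['leaf-102'])
    print(flood_bum("leaf-101", 10020))  # ('239.1.1.20', [])
```

If several VNIs shared one group in this model, every leaf joined to that group would receive BUM traffic for all of them, and a leaf would have to discard frames for VNIs it does not host; a one-group-per-VNI mapping avoids that at the cost of more multicast state.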

Leaf 101

(Use the template below for Leaf 102; it contains no node-specific values.)

```
feature pim

ip pim rp-address 172.16.225.225 group-list 224.0.0.0/4

int loopback0
  ip pim sparse-mode

int loopback100
  ip pim sparse-mode

int eth1/1-2
  ip pim sparse-mode
```
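The "ip pim rp-address ... group-list 224.0.0.0/4" line tells the leaf which RP serves which group range. The Python sketch below models that lookup with the standard ipaddress module. Only the 224.0.0.0/4 mapping comes from this lab; the function name is an assumption, and how NX-OS chooses between overlapping ranges is deliberately not modelled, since there is only one range here.

```python
import ipaddress

# Static RP mapping from this lab: RP address and the group range it serves.
RP_MAPPINGS = [("172.16.225.225", ipaddress.ip_network("224.0.0.0/4"))]


def rp_for_group(group, mappings=RP_MAPPINGS):
    """Return the RP whose group-list covers `group`, or None if no range matches.

    Simplified: with a single mapping there is no overlap to resolve.
    """
    addr = ipaddress.ip_address(group)
    for rp, group_range in mappings:
        if addr in group_range:
            return rp
    return None


if __name__ == "__main__":
    print(rp_for_group("239.1.1.10"))   # 172.16.225.225
    print(rp_for_group("192.168.1.1"))  # None
```

Because 224.0.0.0/4 covers the whole IPv4 multicast range, any group later mapped to a VNI will use the Anycast RP.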

Spine 11

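(Use the template below for Spine 12, changing the loopback25 address to 172.16.25.12; the loopback225 address and the anycast-rp lines stay the same.)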

```
feature pim

int loopback25
  description **Unique address -Anycast-RP address**
  ip address 172.16.25.11/32
  ip router ospf underlay-dc-delhi area 0
  ip ospf network point-to-point
  ip pim sparse-mode

int loopback225
  description **Anycast-RP address**
  ip address 172.16.225.225/32
  ip router ospf underlay-dc-delhi area 0
  ip ospf network point-to-point
  ip pim sparse-mode

ip pim rp-address 172.16.225.225 group-list 224.0.0.0/4
ip pim anycast-rp 172.16.225.225 172.16.25.11
ip pim anycast-rp 172.16.225.225 172.16.25.12

int eth1/1-2
  ip pim sparse-mode
```
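The "ip pim anycast-rp" lines define the set of RPs that share 172.16.225.225. In PIM-based Anycast RP (RFC 4610), the RP that receives a PIM Register from a source's DR forwards a copy to every other member of the set, sourced from its own unique address, so all RPs learn about every source; a Register that was already forwarded by a member is not forwarded again. The Python sketch below models only that forwarding decision. The member addresses come from this lab; using 172.16.0.101 as Leaf 101's Register source is a hypothetical value.

```python
# Anycast-RP set from this lab: shared RP address and each spine's unique member address.
ANYCAST_RP = "172.16.225.225"
MEMBERS = ["172.16.25.11", "172.16.25.12"]


def forward_register(local_member, register_source, members=MEMBERS):
    """Return the members this RP forwards a received PIM Register to.

    Simplified RFC 4610 behaviour:
    - Register from a DR (source not in the member set): copy it to every
      other member, using local_member as the source address.
    - Register already forwarded by a member: process locally, do not re-forward.
    """
    if register_source in members:
        return []
    return [m for m in members if m != local_member]


if __name__ == "__main__":
    # Hypothetical: Leaf 101's DR registers with Spine 11.
    print(forward_register("172.16.25.11", "172.16.0.101"))  # ['172.16.25.12']
    # Spine 12 receives Spine 11's copy and stops there.
    print(forward_register("172.16.25.12", "172.16.25.11"))  # []
```

This is also why each spine needs its unique loopback25 in addition to the shared loopback225: the members must be able to address each other individually.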

Verification

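- Leaf 101 shows 172.16.225.225 as the RP for 224.0.0.0/4.

- Leaf 101's routing table has two equal-cost paths to 172.16.225.225, one through each spine, because both spines advertise the same anycast address into OSPF.

- Both spines list 172.16.25.11 and 172.16.25.12 as members of the same Anycast-RP set; the asterisk marks the member address that belongs to the local switch.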

```
leaf-101# show ip pim rp
PIM RP Status Information for VRF "default"
BSR disabled
Auto-RP disabled
BSR RP Candidate policy: None
BSR RP policy: None
Auto-RP Announce policy: None
Auto-RP Discovery policy: None

RP: 172.16.225.225, (0),
 uptime: 2d08h   priority: 255,
 RP-source: (local),
 group ranges:
 224.0.0.0/4
```

```
leaf-101# show ip route 172.16.225.225
IP Route Table for VRF "default"
'*' denotes best ucast next-hop
'**' denotes best mcast next-hop
'[x/y]' denotes [preference/metric]
'%<string>' in via output denotes VRF <string>

172.16.225.225/32, ubest/mbest: 2/0
    *via 172.16.0.11, Eth1/1, [110/41], 2d07h, ospf-underlay-dc-delhi, intra
    *via 172.16.0.12, Eth1/2, [110/41], 2d07h, ospf-underlay-dc-delhi, intra
```

```
spine-11# sh ip pim rp vrf default
PIM RP Status Information for VRF "default"
BSR disabled
Auto-RP disabled
BSR RP Candidate policy: None
BSR RP policy: None
Auto-RP Announce policy: None
Auto-RP Discovery policy: None

Anycast-RP 172.16.225.225 members:
  172.16.25.11*  172.16.25.12

RP: 172.16.225.225*, (0),
 uptime: 2d08h   priority: 255,
 RP-source: (local),
 group ranges:
 224.0.0.0/4
```

```
spine-12# sh ip pim rp vrf default
PIM RP Status Information for VRF "default"
BSR disabled
Auto-RP disabled
BSR RP Candidate policy: None
BSR RP policy: None
Auto-RP Announce policy: None
Auto-RP Discovery policy: None

Anycast-RP 172.16.225.225 members:
  172.16.25.11  172.16.25.12*

RP: 172.16.225.225*, (0),
 uptime: 2d08h   priority: 255,
 RP-source: (local),
 group ranges:
 224.0.0.0/4
```
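To confirm that both spines agree on the Anycast-RP set, the member lines can be compared directly. The Python sketch below extracts the shared RP address, the member set, and the locally flagged member from excerpts of the two spine outputs above. The regular expressions and function name are assumptions of this sketch, and treating the asterisk as "local member" follows the reading of the output given in the verification notes.

```python
import re

# Excerpts of the spine outputs shown above.
SPINE_11 = """\
Anycast-RP 172.16.225.225 members:
  172.16.25.11*  172.16.25.12
RP: 172.16.225.225*, (0),
"""
SPINE_12 = """\
Anycast-RP 172.16.225.225 members:
  172.16.25.11  172.16.25.12*
RP: 172.16.225.225*, (0),
"""

HEADER = re.compile(r"^Anycast-RP (?P<rp>[\d.]+) members:")
MEMBER = re.compile(r"(\d+\.\d+\.\d+\.\d+)(\*?)")  # address, optional local marker


def anycast_members(text):
    """Return (anycast RP, set of members, local member) or None if absent."""
    lines = text.splitlines()
    for i, line in enumerate(lines):
        m = HEADER.match(line.strip())
        if m and i + 1 < len(lines):
            found = MEMBER.findall(lines[i + 1])
            members = {addr for addr, _ in found}
            local = next((addr for addr, star in found if star), None)
            return m["rp"], members, local
    return None


if __name__ == "__main__":
    rp11, set11, local11 = anycast_members(SPINE_11)
    rp12, set12, local12 = anycast_members(SPINE_12)
    # Same RP and same member set on both spines, each flagging a different local member.
    print(rp11 == rp12 and set11 == set12 and local11 != local12)  # True
```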